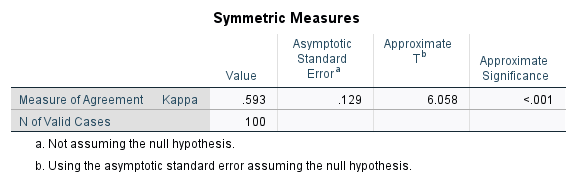

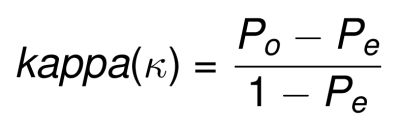

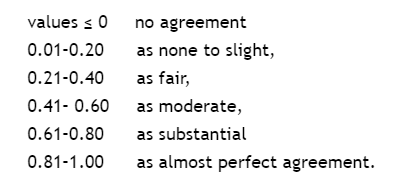

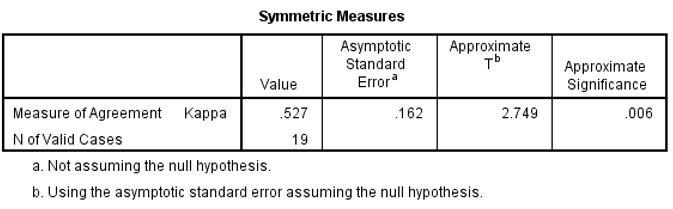

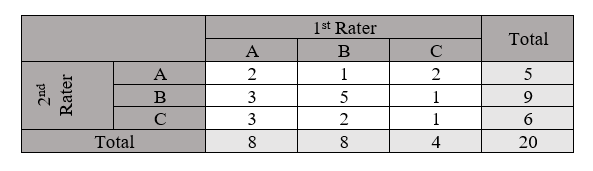

Understanding the calculation of the kappa statistic: A measure of inter-observer reliability. Mishra SS, Nitika - Int J Acad Med

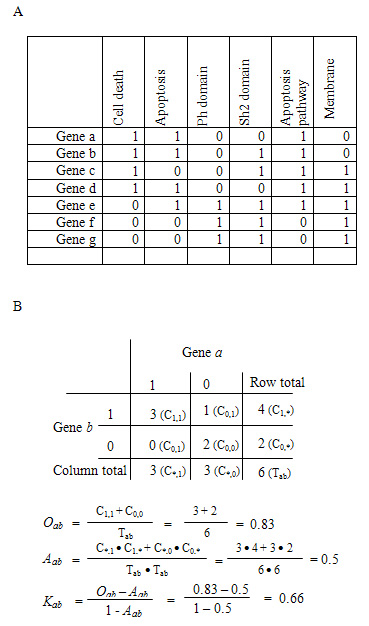

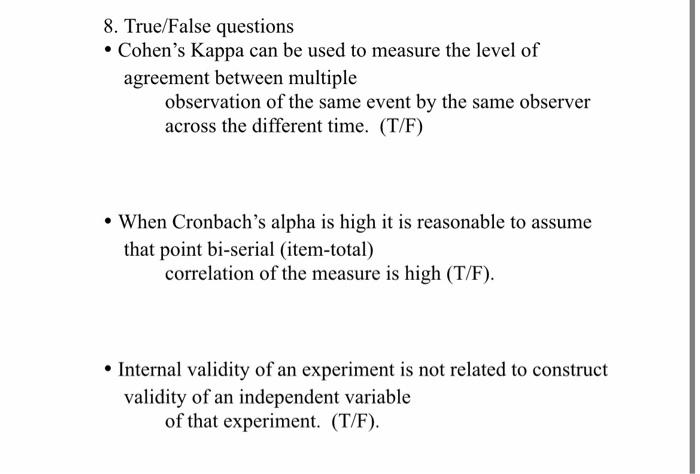

Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing. Kappa is intended to. - ppt download

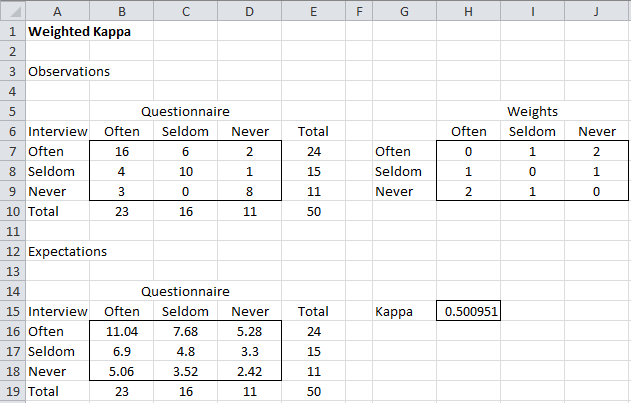

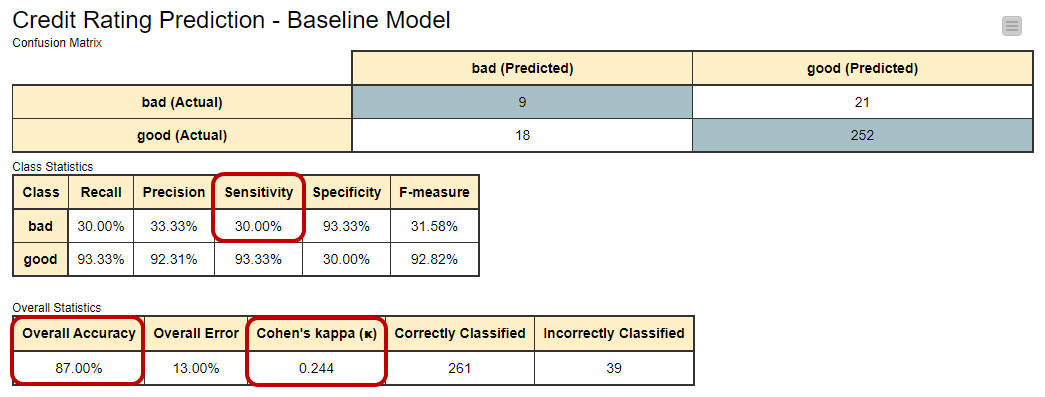

Cohen's Kappa and Fleiss' Kappa— How to Measure the Agreement Between Raters | by Audhi Aprilliant | Medium